1st Semester 2024/25: Program induction and the ARC challenge

- Instructors

- Fausto Carcassi

- ECTS

- 6

- Description

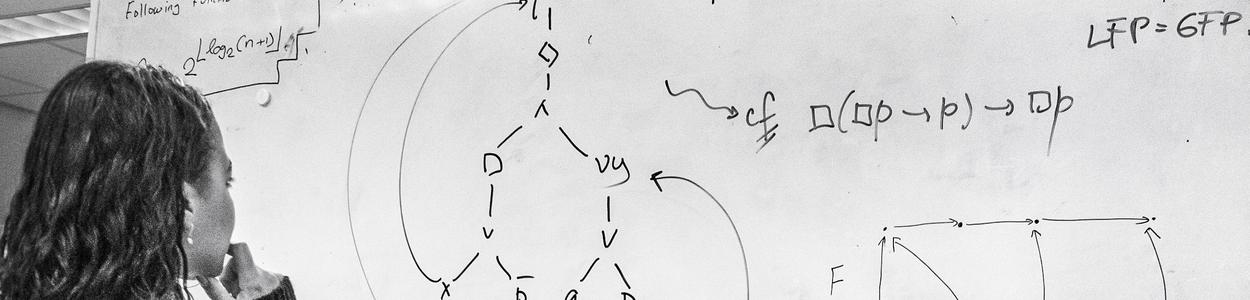

From https://arcprize.org/: "ARC Prize is a $1,000,000+ public competition to beat and open source a solution to the ARC-AGI benchmark." The ARC challenge consists of a set of tasks. In each task, you get a few examples of a rule, and you are given a picture to complete according to the same rule. Humans find most ARC tasks easy, but AI remains surprisingly bad at them. ARC problems are program synthesis problems: the goal is to find a rule (a program) that is consistent with the given examples. This course will start with an overview of current approaches to program induction. Then, we will split into groups and implement our own attempts to solve the ARC challenge. At the end, we will hold a small tournament on the public ARC test set. And maybe your algorithm can win one million dollars! This is a hands-on course where students will invent and implement their own algorithms.
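To make the "program synthesis" framing concrete, here is a minimal sketch of enumerative program induction on grid examples. It is an illustration only, not an approach prescribed by the course. The four-primitive DSL, the function names (`run`, `induce`), and the toy examples are all assumptions made for this sketch. Real ARC tasks need a far richer rule language.

```python
from itertools import product

# A grid is a tuple of rows; each row is a tuple of integer colour codes.
def to_grid(rows):
    return tuple(tuple(row) for row in rows)

# Hypothetical toy DSL: four whole-grid transformations.
def identity(g):
    return g

def flip_h(g):
    return tuple(row[::-1] for row in g)  # mirror left-right

def flip_v(g):
    return g[::-1]  # mirror top-bottom

def transpose(g):
    return tuple(zip(*g))  # swap rows and columns

PRIMITIVES = {
    "identity": identity,
    "flip_h": flip_h,
    "flip_v": flip_v,
    "transpose": transpose,
}

def run(program, grid):
    """Apply a program (a sequence of primitive names) from left to right."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid

def induce(examples, max_len=3):
    """Return the first program, shortest first, that fits every example."""
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, to_grid(i)) == to_grid(o) for i, o in examples):
                return program
    return None  # no program of length <= max_len explains the examples

# Two input/output pairs that follow the same hidden rule
# (a 90-degree clockwise rotation).
train = [
    ([[1, 2], [3, 4]], [[3, 1], [4, 2]]),
    ([[1, 0, 0], [0, 2, 0]], [[0, 1], [2, 0], [0, 0]]),
]

program = induce(train)
prediction = run(program, to_grid([[5, 6], [7, 8]]))
print(program, prediction)
```

No single primitive explains both examples, so the search has to compose two of them. The number of candidate programs grows exponentially with program length, which is why practical approaches rely on learned or hand-designed guidance rather than blind enumeration.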

- Organisation

In the first week, we will have some introductory lectures covering the ARC challenge, program induction, and some promising approaches to it. Starting from the second week, students work on their own approaches in groups. At the beginning of the third week, students will present their progress to each other and will have a (short) meeting with the lecturer to see whether they need any help with their research project. Moreover, the lecturer is available throughout the project for on-demand meetings. During the final week, students will present the outcome of their research project and submit a final report, and together we will test the various implementations on the public ARC test set.

- Prerequisites

Programming knowledge and ideally some experience with the relevant computational methods (PCFGs, semantics, program induction).

- Assessment

(Small groups of) students work on a research project, which they present and write a report on. The final (pass/fail) assessment will be based on the presentations and the final report. The assessment will not be based on performance on the ARC test set.

- References

For literature on ARC, see the ARC-AGI resources section at https://arcprize.org/guide. For literature on program induction, see, e.g.:

Ellis, Kevin, et al. "DreamCoder: growing generalizable, interpretable knowledge with wake–sleep Bayesian program learning." Philosophical Transactions of the Royal Society A 381.2251 (2023): 20220050.

Rule, J. S., Piantadosi, S. T., Cropper, A., et al. "Symbolic metaprogram search improves learning efficiency and explains rule learning in humans." Nature Communications 15 (2024): 6847. https://doi.org/10.1038/s41467-024-50966-x