2nd Semester 2014/15: Monte Carlo Inference for Probabilistic Context-free Grammars

- Instructors

- Wilker Aziz and Miloš Stanojević

If you are interested in this project, please contact the instructors by email. - ECTS

- 6

- Description

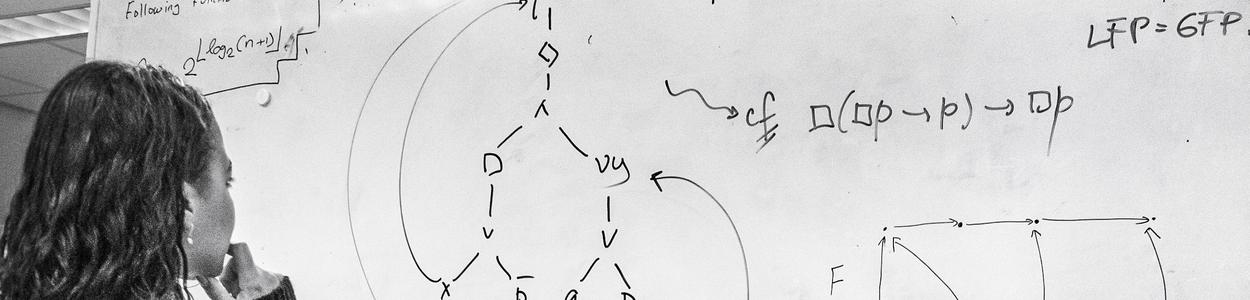

- Probabilistic context-free grammars (PCFGs) are widely used in natural language processing, with applications such as parsing and machine translation. Inference under PCFGs typically requires running a cubic-time algorithm (the CKY algorithm) either to compute expectations (necessary in learning) or to make decisions (e.g., finding a good parse). For large PCFGs, a complete run of CKY is prohibitively slow. The simplest approximate inference technique involves a pruned run of CKY which discards parses deemed unlikely early on in the search. However, arbitrary pruning introduces arbitrary bias, thereby harming learning (biased expectations) and inference (local optimum). In this project, you will deal with a different class of approximate inference methods, namely Monte Carlo (MC) and Markov chain Monte Carlo (MCMC) techniques. The principle is to simulate a complex target distribution and reason over a tractable statistical sample of the space of solutions.

- Organisation

- The project starts with a crash course on important algorithms for PCFGs:

- parsing algorithms such as CKY, and Earley parsing, particularly, their generalisations to weighted intersection;

- semiring parsing and Inside-Outside algorithm;

- decision rules (e.g., Viterbi, MBR).

The next phase of the project has the format of a reading group. First, we will discuss exact sampling from a PCFG (Chappelier et al, 2000) which can be done trivially provided an unpruned chart can be produced. We will then discuss two MCMC techniques that allow samples to be obtained without ever instantiating a complete chart (Bouchard-Cote et al, 2009; Blunsom and Cohn, 2010).

The final part of the project (which amounts to half the programme) involves coding in hackathon-style sessions. We will implement at least one of the MCMC techniques discussed in the reading group (depending on the number of participants we might implement both techniques) and move towards a novel importance sampler which addresses some of the issues with previous work.

- Prerequisites

- Interest in statistical parsing and machine translation, optimisation and sampling for large and complex discrete distributions. The ideal student will be comfortable with context-free grammars, dynamic programming and statistics. This project requires good Python programming skills.

- Assessment

- In order to pass, participants must attend all meetings (lectures, reading group, and hackaton) and contribute to a fruitful research experience. The final grade will be the geometric average of the following three components (uniformly weighted).

- Crash course: exercises and programming assignment.

- Reading group: presentation in which the student discusses one of the papers.

- Hackaton: quality and performance of code.